C++ Web Crawler [Fetching a Web Page's HTML Source]

Summary

A crawler that can fetch information from web pages.

Body

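Update, 2021-8-9:

First I have to say this: CSDN is garbage.

Utter garbage.

CSDN has a huge number of posts with C++ code for fetching web page data, and almost all of them are identical.

Ridiculous, isn't it? It is the same few snippets repeated over and over. Even more ridiculous, some people didn't even copy the whole thing, so the code doesn't run.

A real waste of time.

In the end I did find something that works.

Many of the solutions online are built on the MFC libraries, which can't be used in a Console project, so I looked for a few other approaches.

I have modified all of the code below, adjusting the parameters and return values so that it is more convenient to use.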

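1 -- Using the <wininet.h> family of functions

Without further ado, here is the code. It runs under VS2019; the listing below is complete and can be run directly.

It uses functions for converting between UTF-8 and GBK; see http://huidong.xyz/index.php?mode=2&id=298

Some pages may fail to fetch, for reasons I haven't figured out, but they seem to be a minority.

Suggestion: delete the main function and the stdio.h include from the code below and package the rest as web.h.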

#include <windows.h>
#include <wininet.h>
#include <stdio.h>
#include <string.h> // strlen
#include <string>
using namespace std;
#pragma comment(lib, "wininet.lib")

/**
 * @brief Convert a UTF-8 string to a GBK string
 * @param[in] lpUTF8Str: source UTF-8 string (null-terminated)
 * @param[out] lpGBKStr: buffer that receives the GBK string; pass NULL to query the required size
 * @param[in] nGBKStrLen: size of the GBK buffer, in bytes
 * @return number of bytes in the converted string, terminating '\0' included; 0 on failure
 * @note Code from https://www.cnblogs.com/zhongbin/p/3160641.html
 * @note CP_ACP is the system ANSI code page, which is GBK only on Simplified Chinese Windows
 */
int UTF8ToGBK(char* lpUTF8Str, char* lpGBKStr, int nGBKStrLen)
{
    if (!lpUTF8Str) return 0;

    // First call: number of wide characters needed (terminator included)
    int nRetLen = ::MultiByteToWideChar(CP_UTF8, 0, lpUTF8Str, -1, NULL, 0);
    if (!nRetLen) return 0;

    wchar_t* lpUnicodeStr = new WCHAR[nRetLen + 1];
    nRetLen = ::MultiByteToWideChar(CP_UTF8, 0, lpUTF8Str, -1, lpUnicodeStr, nRetLen);
    if (!nRetLen)
    {
        delete[] lpUnicodeStr; // free the buffer on the failure path too
        return 0;
    }

    // Number of bytes needed for the GBK result (terminator included)
    nRetLen = ::WideCharToMultiByte(CP_ACP, 0, lpUnicodeStr, -1, NULL, 0, NULL, NULL);
    if (!lpGBKStr)
    {
        delete[] lpUnicodeStr;
        return nRetLen;
    }
    if (nGBKStrLen < nRetLen)
    {
        delete[] lpUnicodeStr;
        return 0;
    }
    nRetLen = ::WideCharToMultiByte(CP_ACP, 0, lpUnicodeStr, -1, lpGBKStr, nRetLen, NULL, NULL);
    delete[] lpUnicodeStr;
    return nRetLen;
}

/**
 * @brief Check whether a string is UTF-8 encoded
 * @note From https://blog.csdn.net/jiankekejian/article/details/106720432 (modified)
 */
bool isUTF8(const char* str)
{
    // Lead-byte patterns: (byte & cal) == cmp means (index + 1) continuation bytes follow
    static const struct
    {
        int cal;
        int cmp;
    } Utf8NumMap[] = { {0xE0,0xC0},{0xF0,0xE0},{0xF8,0xF0},{0xFC,0xF8},{0xFE,0xFC} };
    const int nMapSize = (int)(sizeof(Utf8NumMap) / sizeof(Utf8NumMap[0]));

    int length = (int)strlen(str);
    int check_sub = 0; // continuation bytes still expected
    for (int i = 0; i < length; i++)
    {
        unsigned char c = (unsigned char)str[i];
        if (check_sub == 0)
        {
            if ((c >> 7) == 0) continue; // plain ASCII
            for (int j = 0; j < nMapSize; j++)
            {
                if ((c & Utf8NumMap[j].cal) == Utf8NumMap[j].cmp)
                {
                    check_sub = j + 1;
                    break;
                }
            }
            if (0 == check_sub) return false; // not a valid lead byte
        }
        else
        {
            if ((c & 0xC0) != 0x80) return false; // not a continuation byte
            check_sub--;
        }
    }
    return check_sub == 0; // a sequence cut off at the end is not valid UTF-8
}

/**
 * @brief Fetch the source of a web page
 * @param[in] Url: page URL
 * @param[in] isGBK: whether to force-convert the result to GBK
 * @return the page source; an empty string on failure
 * @note Code from https://www.cnblogs.com/croot/p/3391003.html (modified)
 */
string GetWebSrcCode(LPCWSTR Url, bool isGBK = true)
{
    string strHTML;
    // The W versions are called explicitly so the wide-string arguments compile in both Unicode and ANSI builds
    HINTERNET hSession = InternetOpenW(L"IE6.0", INTERNET_OPEN_TYPE_PRECONFIG, NULL, NULL, 0);
    if (hSession != NULL)
    {
        HINTERNET hURL = InternetOpenUrlW(hSession, Url, NULL, 0, INTERNET_FLAG_DONT_CACHE, 0);
        if (hURL != NULL)
        {
            const int nBlockSize = 1024;
            char Temp[nBlockSize] = { 0 };
            DWORD Number = 0;
            // Stop when the call fails or when 0 bytes are read (end of data)
            while (InternetReadFile(hURL, Temp, nBlockSize, &Number) && Number > 0)
            {
                strHTML.append(Temp, Number);
            }
            InternetCloseHandle(hURL);
        }
        InternetCloseHandle(hSession);
    }
    if (isGBK && !strHTML.empty() && isUTF8(strHTML.c_str()))
    {
        string strGBK;
        strGBK.resize(strHTML.size() * 2);
        UTF8ToGBK(&strHTML[0], &strGBK[0], (int)strGBK.size());
        strGBK.resize(strlen(strGBK.c_str())); // drop the extra '\0' bytes
        return strGBK;
    }
    return strHTML;
}

int main()
{
    string str = GetWebSrcCode(L"http://www.huidong.xyz/");
    printf("%s", str.c_str());
    return 0;
}

2 -- Using win socket (Winsock)

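From http://www.vcgood.com/archives/3759

Like the first approach, this one uses the UTF-8 and GBK conversion functions; see http://huidong.xyz/index.php?mode=2&id=298

Tested and working in VS2019.

Drawback: in my tests it fails on more sites than the first approach. For example, cnblogs.com cannot be fetched, while sites such as www.baidu.com can.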
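One way to investigate a site that "can't be crawled" is to look at the status line of the raw response rather than only the page text. The sketch below splits a raw HTTP/1.x response into status code, header block, and body. `HttpResponse`, `splitResponse`, and `isRedirect` are hypothetical names introduced here for illustration; they are not part of the original code. A site that only serves HTTPS will often answer a plain-HTTP request on port 80 with a redirect (301/302) instead of the page. That may be what happens with cnblogs.com, but I have not verified it for that site.

```cpp
#include <cstdlib>
#include <string>

// Hypothetical helper type, not part of the original listing.
struct HttpResponse {
    int status = 0;       // e.g. 200 or 301; 0 if the status line could not be parsed
    std::string headers;  // status line plus header lines
    std::string body;     // everything after the first blank line
};

// Split a raw HTTP/1.x response into status code, header block and body.
HttpResponse splitResponse(const std::string& raw)
{
    HttpResponse r;
    const std::string sep = "\r\n\r\n";
    std::string::size_type pos = raw.find(sep);
    if (pos == std::string::npos) {
        r.headers = raw;  // no blank line found: treat everything as headers
    } else {
        r.headers = raw.substr(0, pos);
        r.body = raw.substr(pos + sep.size());
    }
    // The status line looks like "HTTP/1.1 301 Moved Permanently"
    if (r.headers.compare(0, 5, "HTTP/") == 0) {
        std::string::size_type sp = r.headers.find(' ');
        if (sp != std::string::npos)
            r.status = std::atoi(r.headers.c_str() + sp + 1);
    }
    return r;
}

// True for the common redirect status codes.
bool isRedirect(const HttpResponse& r)
{
    return r.status == 301 || r.status == 302 || r.status == 303 ||
           r.status == 307 || r.status == 308;
}
```

Passing the string returned by a raw-socket fetch to `splitResponse` and printing `status` and `headers` shows whether the server sent the page, a redirect, or an error. The sketch assumes the response was captured before any encoding conversion and ignores chunked transfer encoding.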

#include <stdio.h>
#include <string.h>   // strlen, memset
#include <winsock2.h> // Winsock 2 header, matching ws2_32.lib and MAKEWORD(2, 2)
#include <string>
#pragma comment(lib, "ws2_32.lib")
using namespace std;

/**
 * @brief Convert a UTF-8 string to a GBK string
 * @param[in] lpUTF8Str: source UTF-8 string (null-terminated)
 * @param[out] lpGBKStr: buffer that receives the GBK string; pass NULL to query the required size
 * @param[in] nGBKStrLen: size of the GBK buffer, in bytes
 * @return number of bytes in the converted string, terminating '\0' included; 0 on failure
 * @note Code from https://www.cnblogs.com/zhongbin/p/3160641.html
 * @note CP_ACP is the system ANSI code page, which is GBK only on Simplified Chinese Windows
 */
int UTF8ToGBK(char* lpUTF8Str, char* lpGBKStr, int nGBKStrLen)
{
    if (!lpUTF8Str) return 0;

    // First call: number of wide characters needed (terminator included)
    int nRetLen = ::MultiByteToWideChar(CP_UTF8, 0, lpUTF8Str, -1, NULL, 0);
    if (!nRetLen) return 0;

    wchar_t* lpUnicodeStr = new WCHAR[nRetLen + 1];
    nRetLen = ::MultiByteToWideChar(CP_UTF8, 0, lpUTF8Str, -1, lpUnicodeStr, nRetLen);
    if (!nRetLen)
    {
        delete[] lpUnicodeStr; // free the buffer on the failure path too
        return 0;
    }

    // Number of bytes needed for the GBK result (terminator included)
    nRetLen = ::WideCharToMultiByte(CP_ACP, 0, lpUnicodeStr, -1, NULL, 0, NULL, NULL);
    if (!lpGBKStr)
    {
        delete[] lpUnicodeStr;
        return nRetLen;
    }
    if (nGBKStrLen < nRetLen)
    {
        delete[] lpUnicodeStr;
        return 0;
    }
    nRetLen = ::WideCharToMultiByte(CP_ACP, 0, lpUnicodeStr, -1, lpGBKStr, nRetLen, NULL, NULL);
    delete[] lpUnicodeStr;
    return nRetLen;
}

/**
 * @brief Check whether a string is UTF-8 encoded
 * @note From https://blog.csdn.net/jiankekejian/article/details/106720432 (modified)
 */
bool isUTF8(const char* str)
{
    // Lead-byte patterns: (byte & cal) == cmp means (index + 1) continuation bytes follow
    static const struct
    {
        int cal;
        int cmp;
    } Utf8NumMap[] = { {0xE0,0xC0},{0xF0,0xE0},{0xF8,0xF0},{0xFC,0xF8},{0xFE,0xFC} };
    const int nMapSize = (int)(sizeof(Utf8NumMap) / sizeof(Utf8NumMap[0]));

    int length = (int)strlen(str);
    int check_sub = 0; // continuation bytes still expected
    for (int i = 0; i < length; i++)
    {
        unsigned char c = (unsigned char)str[i];
        if (check_sub == 0)
        {
            if ((c >> 7) == 0) continue; // plain ASCII
            for (int j = 0; j < nMapSize; j++)
            {
                if ((c & Utf8NumMap[j].cal) == Utf8NumMap[j].cmp)
                {
                    check_sub = j + 1;
                    break;
                }
            }
            if (0 == check_sub) return false; // not a valid lead byte
        }
        else
        {
            if ((c & 0xC0) != 0x80) return false; // not a continuation byte
            check_sub--;
        }
    }
    return check_sub == 0; // a sequence cut off at the end is not valid UTF-8
}

/**
 * @brief Fetch the HTML source of a web page
 * @param[in] url: address without the "http://" prefix, e.g. "www.baidu.com/index.html"
 * @return the raw response (HTTP headers followed by the page source); an empty string on failure
 * @note Code from http://www.vcgood.com/archives/3759 (modified)
 */
string geturl(const char* url)
{
    string strHTML;
    WSADATA WSAData = { 0 };
    if (WSAStartup(MAKEWORD(2, 2), &WSAData))
    {
        printf("WSA failed\n");
        return {};
    }

    // Split "host/path" into host and path; the path defaults to "/"
    string strUrl = url;
    string host = strUrl, GET = "/";
    size_t nSlash = strUrl.find('/');
    if (nSlash != string::npos)
    {
        host = strUrl.substr(0, nSlash);
        GET = strUrl.substr(nSlash);
    }

    struct hostent* pURL = gethostbyname(host.c_str());
    SOCKET sockfd = socket(PF_INET, SOCK_STREAM, IPPROTO_TCP);
    if (pURL == NULL || sockfd == INVALID_SOCKET)
    {
        printf("resolve or socket failed\n");
        if (sockfd != INVALID_SOCKET) closesocket(sockfd);
        WSACleanup();
        return {};
    }

    struct sockaddr_in addr;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = *((unsigned long*)pURL->h_addr);
    addr.sin_port = htons(80); // plain HTTP only

    string header = "GET " + GET + " HTTP/1.1\r\n";
    header += "Host: " + host + "\r\n";
    header += "Connection: close\r\n\r\n";

    if (connect(sockfd, (SOCKADDR*)&addr, sizeof(addr)) == 0)
    {
        send(sockfd, header.c_str(), (int)header.size(), 0);
        // recv may return only part of the response, so read until the server closes the connection
        char text[BUFSIZ];
        int nRecv = 0;
        while ((nRecv = recv(sockfd, text, sizeof(text), 0)) > 0)
        {
            strHTML.append(text, nRecv);
        }
    }
    closesocket(sockfd);
    WSACleanup();

    if (!strHTML.empty() && isUTF8(strHTML.c_str()))
    {
        string strGBK;
        strGBK.resize(strHTML.size() * 2);
        UTF8ToGBK(&strHTML[0], &strGBK[0], (int)strGBK.size());
        strGBK.resize(strlen(strGBK.c_str())); // drop the extra '\0' bytes
        return strGBK;
    }
    return strHTML;
}

int main()
{
    char url[256] = { 0 };
    printf("http://");
    scanf_s("%s", url, (unsigned)sizeof(url));
    string strHTML = geturl(url);
    printf("%s", strHTML.c_str());
    return 0;
}

------- End of the added content ---------

This one was written earlier by ckj, but it seems to have some problems now:

Code:

#include <stdio.h>
#include <string.h>
#include <iostream>
#include <winsock2.h> // must come before <windows.h>
#include <windows.h>
#include <sstream>
#pragma comment(lib,"ws2_32.lib")
using namespace std;

const int recvbuf_size = 204800000;

char recvbuf[recvbuf_size] = { 0 };

// Return the HTML content of a web page (the raw HTTP response)
// url: page URL
string getweb(string url)
{
    // ym: host name, src: path on the server (defaults to "/")
    string ym, src = "/";
    size_t start = 0;
    if (url.compare(0, 7, "http://") == 0) start = 7;
    else if (url.compare(0, 8, "https://") == 0) start = 8; // still fetched over plain HTTP on port 80
    size_t slash = url.find('/', start);
    if (slash != string::npos)
    {
        ym = url.substr(start, slash - start);
        src = url.substr(slash);
    }
    else
    {
        ym = url.substr(start);
    }

    WSADATA wsadata;
    if (WSAStartup(MAKEWORD(2, 2), &wsadata) != 0) return "";
    HOSTENT* host = gethostbyname(ym.c_str());
    SOCKET sever = socket(PF_INET, SOCK_STREAM, 0);
    if (host == NULL || sever == INVALID_SOCKET)
    {
        if (sever != INVALID_SOCKET) closesocket(sever);
        WSACleanup();
        return "";
    }
    sockaddr_in severaddr;
    memset(&severaddr, 0, sizeof(severaddr));
    severaddr.sin_family = AF_INET;
    memcpy(&severaddr.sin_addr, host->h_addr_list[0], host->h_length);
    severaddr.sin_port = htons(80);
    if (connect(sever, (SOCKADDR*)&severaddr, sizeof(severaddr)) != 0)
    {
        closesocket(sever);
        WSACleanup();
        return "";
    }

    stringstream stream;
    stream << "GET " << src << " HTTP/1.1\r\n";
    stream << "Host: " << ym << "\r\n";
    stream << "Connection: close\r\n"; // let the server close the connection once the response is complete
    stream << "User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.80 Safari/537.36 Edg/86.0.622.48\r\n";
    // No Accept-Encoding header: this code cannot decompress gzip/deflate/br bodies
    stream << "Accept-Language: zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6\r\n\r\n";
    string request = stream.str();
    send(sever, request.c_str(), (int)request.size(), 0); // send exactly the request, not a zero-padded buffer

    // A single recv returns only the data that has arrived so far, so keep reading until the connection closes
    int total = 0, n = 0;
    while (total < recvbuf_size && (n = recv(sever, recvbuf + total, recvbuf_size - total, 0)) > 0)
    {
        total += n;
    }
    closesocket(sever);
    WSACleanup();
    return string(recvbuf, total);
}

int main()

{

// 爬取huidong网的首页

string web = getweb("http://www.huidong.xyz/index.php");

printf(web.c_str());

while(true);

return 0;

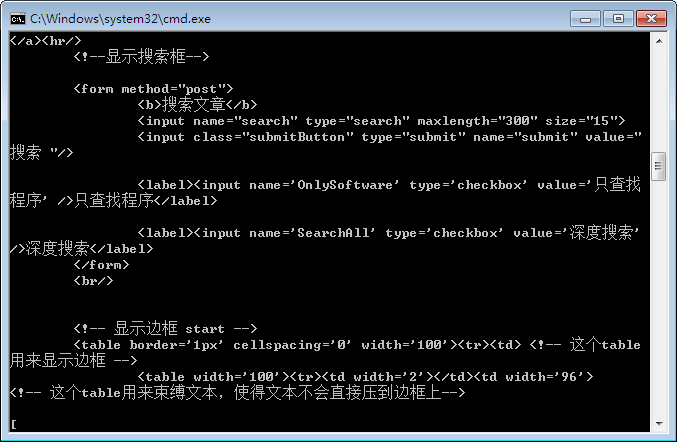

}效果:

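I found that when I changed recvbuf to a local variable allocated with new, the fetched page content came back incomplete; I haven't pinned down the cause. A plausible one is that recv only returns the bytes that have arrived so far, so code that calls it once gets a timing-dependent amount of data.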
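The incomplete-content symptom is what one would expect whenever a stream is read with a single call. The sketch below illustrates the usual remedy, reading in a loop until the source reports end of stream. It is a minimal, portable illustration: `readAll` and `ChunkedSource` are hypothetical names introduced here, and `ChunkedSource` is a fake stand-in for a socket that hands out data a few bytes at a time, the way recv may.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstring>
#include <string>

// Read from `readSome` until it reports end of stream (0) or an error (< 0).
// `readSome(buf, len)` is assumed to behave like recv: it may return fewer
// bytes than requested.
template <typename ReadFn>
std::string readAll(ReadFn readSome)
{
    std::string out;
    char buf[4096];
    for (;;) {
        int n = readSome(buf, (int)sizeof(buf));
        if (n <= 0) break;   // 0: stream closed, < 0: error
        out.append(buf, n);  // append exactly n bytes, no '\0' needed
    }
    return out;
}

// Fake "socket" that returns at most `chunk` bytes per call.
struct ChunkedSource {
    std::string data;
    std::size_t pos;
    std::size_t chunk;

    ChunkedSource(const std::string& d, std::size_t c) : data(d), pos(0), chunk(c) {}

    int operator()(char* buf, int len)
    {
        std::size_t n = std::min({chunk, (std::size_t)len, data.size() - pos});
        std::memcpy(buf, data.data() + pos, n);
        pos += n;
        return (int)n;
    }
};
```

Calling a `ChunkedSource` once yields only the first chunk, while `readAll` collects the whole string. With Winsock, the same loop would wrap a lambda that forwards to `recv(sock, buf, len, 0)`, combined with a `Connection: close` request header so that the server ends the stream when the response is complete.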

Some sites can't be fetched; the request headers are probably at fault.
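Since the request headers are the suspect, it can help to build the request in one small, testable place. The sketch below parses a URL into scheme, host, and path, then builds a minimal GET request with correctly spelled header names and CRLF line endings. `UrlParts`, `parseUrl`, and `buildGetRequest` are hypothetical helpers introduced for illustration. The sketch ignores ports, user info, and URLs whose path contains "://" without a leading scheme.

```cpp
#include <string>

// Hypothetical helper type, not part of the original listing.
struct UrlParts {
    std::string scheme;  // "http", "https", or empty if the URL has no scheme
    std::string host;
    std::string path;    // always begins with '/'
};

// Split "scheme://host/path" into its parts; the path defaults to "/".
UrlParts parseUrl(const std::string& url)
{
    UrlParts p;
    std::string rest = url;
    std::string::size_type s = url.find("://");
    if (s != std::string::npos) {
        p.scheme = url.substr(0, s);
        rest = url.substr(s + 3);
    }
    std::string::size_type slash = rest.find('/');
    if (slash == std::string::npos) {
        p.host = rest;
        p.path = "/";
    } else {
        p.host = rest.substr(0, slash);
        p.path = rest.substr(slash);
    }
    return p;
}

// Build a minimal HTTP/1.1 GET request. Header names contain no spaces,
// every line ends with CRLF, and an empty line ends the header block.
std::string buildGetRequest(const UrlParts& p)
{
    std::string req;
    req += "GET " + p.path + " HTTP/1.1\r\n";
    req += "Host: " + p.host + "\r\n";
    req += "Connection: close\r\n";
    req += "\r\n";
    return req;
}
```

For `http://www.huidong.xyz/index.php` this gives host `www.huidong.xyz` and path `/index.php`. A caller can also check `scheme` and refuse `https` URLs up front, because a plain socket on port 80 cannot speak TLS.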